The End of Shouting: Prompts as Programs in GPT-5

Why does a prompt that works brilliantly with Claude suddenly break on GPT-5? And since when do we have to write prompts like rule-based programs? How do we enforce “creativity” when Marketing pushes for it?

The answer is a fundamental paradigm shift: GPT-5 with reasoning (GPT-5 Thinking) follows instructions with a precision we haven’t seen before (GPT-4.1 was the exception, but lacked reasoning). What sounds like a feature flips established prompt-engineering practices on its head. The era of “shouting”—ALL CAPS, exclamation marks, redundant pleading—is over. Clear rules, explicit policies, and structured instructions now rule the day.

Note: This article refers to the reasoning model “GPT-5 Thinking.” The “GPT-5 Instant” model without explicit reasoning behaves differently in some respects.

From Vibes to Rules

If you’ve been working with Claude < 4.5, GPT-4o, Gemini 2.5, or similar models, you know the pattern: you write prompts with lots of “feel,” repeat key instructions, and use all caps letters and exclamation points to “add emphasis.”

That “shouting” was a rational response to a behavior pattern: earlier models interpreted prompts in a far more vibe-driven way. They got the general direction but were easily derailed by nuances, subordinate clauses, or competing instructions. Prompt engineering became the art of redundancy and amplification.

GPT-4.1 already showed much better instruction following, but most of us barely had time to adapt—then GPT-5 landed. And GPT-5 changes everything. Eric Zakariasson from Cursor sums it up:

"GPT-5 is one of the most steerable models I've used... When I was more specific, I was surprised by how smart the model was." cursor.com

In practice, this “steerability” means: every syllable counts. Every subordinate clause is honored. Every rule is followed. Nate B Jones puts it plainly in his Prompting Guide to GPT-5:

"This is a speedboat with a really big rudder. This model wants to go fast and it wants to be steered really, really hard. But most people's prompts are not in a place where we can effectively steer that model."

This is an inflection point. Prompts are no longer wish lists or hints—they’re executable policies.

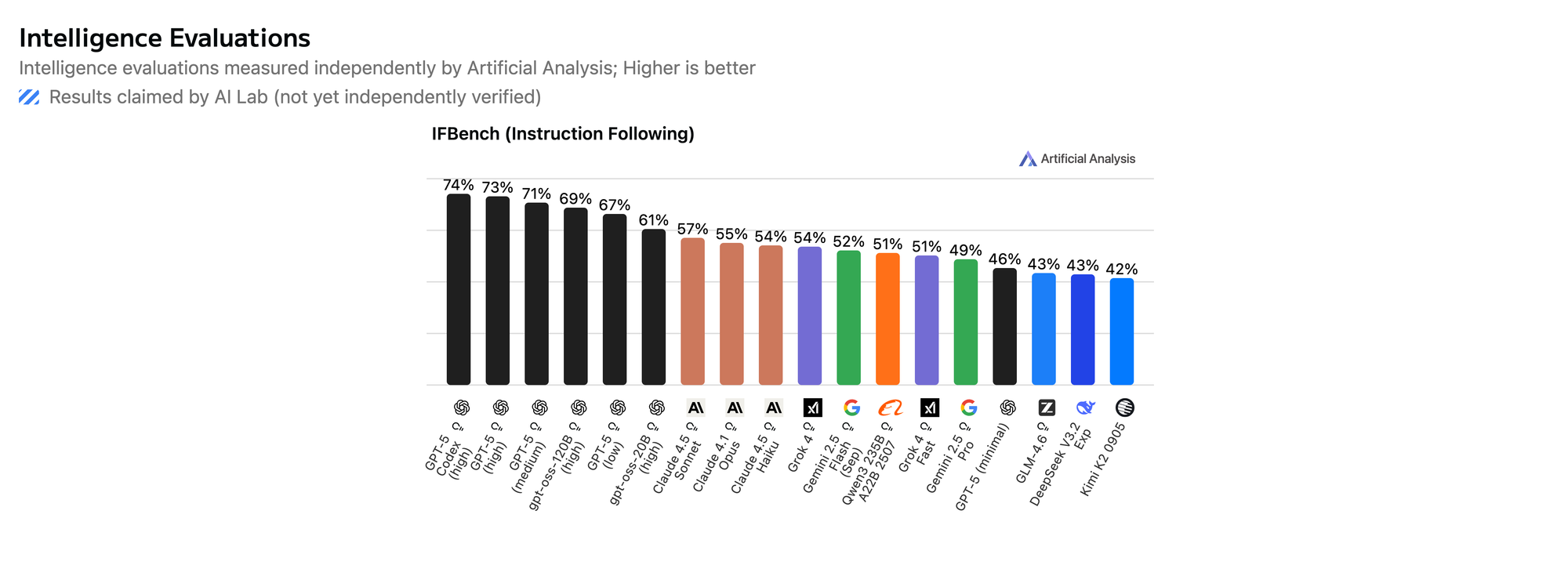

Instruction Following in Benchmarks

Instruction-following can be quantified. Artificial Analysis evaluates current frontier models with IFBench, a standardized benchmark for rule adherence. The results show clear generational differences:

The GPT-5 family tops the chart with 61% to 74%. For comparison: Claude models hit 54–57%, Gemini comes in at 52–54%, Grok at 51%. The gap may sound modest—but in practice it means GPT-5 deviates far less from complex, nested rule sets.

The spread within the GPT-5 variants is instructive. The “minimal” variant is predictably lower (not in the top tier), while optimized variants consistently stay above 67%. That reflects semantic parameters: reasoning_effort and verbosity affect not just output style but also instruction-following accuracy.

Despite lower IFBench scores, Claude remains strong in practice—developers report high reliability in multi-turn workflows and tool orchestration. The profiles diverge: GPT-5 adheres more precisely to granular, nested rules; Claude often delivers “first-try success” on standard scenarios.

The benchmark numbers confirm it: GPT-5 sets a new bar for rule-based steerability. That has consequences for anyone building complex prompt systems.

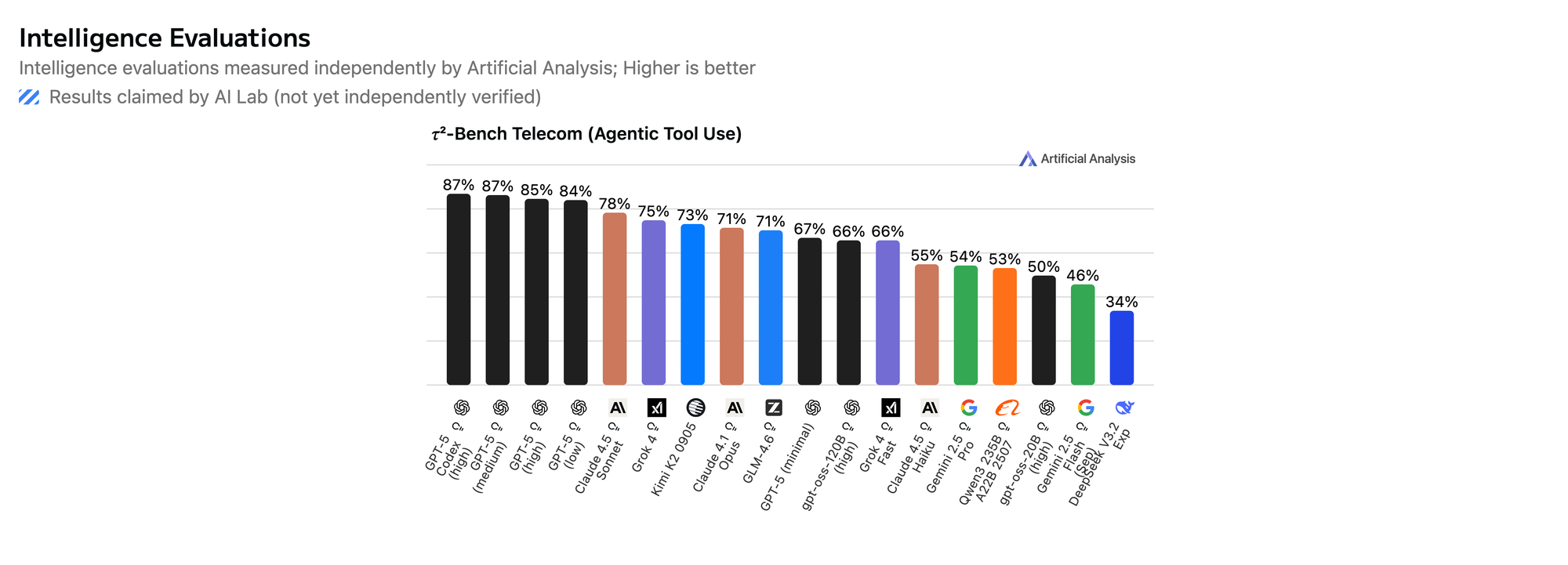

Tool Orchestration: τ²-Bench Telecom

Even more relevant for agentic workflows is precise, instruction-driven tool orchestration. The τ²-Bench Telecom benchmark simulates technical-support scenarios where an agent must guide a user through complex troubleshooting across multiple turns, with tools on both sides. The agent must honor policies (“check X, then Y”), interpret inputs, conditionally call tools, and direct multi-step actions (“Turn off Mobile Data and check the status bar”). That’s far more complex than single-turn tool calling.

GPT-5 also leads here with 84–87%. Claude 4.5 Sonnet reaches 78%, Claude Opus 4.1 hits 71%. Gemini 2.5 Pro lands at 46%—notably weaker than on pure instruction following.

The spread is telling: while IFBench measures rule adherence, τ²-Bench tests conditional, multi-turn tool orchestration. The case later in this article—“Use web_search_preview when required by the procedure for the content type”—is simpler by comparison (single turn, no user interaction) but illustrates the same principle: policies become tool calls. GPT-5’s 87% on τ²-Bench signals that even in complex, multi-step scenarios with dynamic state management, policy-driven tool calls remain reliable.

What GPT-5 Changes in Practice

Rule Adherence over Interpretation

GPT-5 minimizes room for interpretation. Where earlier models read instructions with “creative latitude,” GPT-5 consistently follows nested rules. A practical example: Lee Robinson at Cursor reports he could steer the model precisely—“The default writing style of GPT-5 was more verbose than I wanted, so I was able to make a rule to have responses be more concise... [and] steer the model towards asking fewer follow-up questions.” cursor.com

It sounds trivial but it’s fundamental: style, verbosity, agent behavior—everything is controllable via explicit rules in the prompt. No more trial-and-error to “hit the right vibe.”

The End of Sampling Knobs

A notable side effect: GPT-5 Thinking ships without classic sampling parameters like temperature, top-k, or top-p. What’s left:

reasoning_effort: How much “thinking time” the model invests (minimal, low, medium, high).verbosity: Granular control over output length (low, medium, high)

If you’ve lost sleep balancing temperature against prompt instructions, you can relax. In a world where prompts are programs, we don’t need stochastic dials. We write the desired variability into the prompt. OpenAI is confident enough in GPT-5’s steerability to effectively retire those inference parameters.

Monolithic Prompts with Branching Logic

“Back then” we built AI workflows as cascades: classify → route to specialized agents → pre-process → tool calls. With GPT-5 Thinking, you can push much of that into a single prompt. From my concept doc: “Is this link a podcast? If yes, use Firecrawl-MCP to find the transcript online.” For this example, ignore security; in general, the more tools and access an agent gets, the stronger the guardrails must be.

The model understands conditional tool policies, branching rule sets, and type-dependent processing—without external orchestration. Prompts become executable programs with explicit rules, stop criteria, and validation.

Cerebro: A System Prompt as Rulebook

To show “prompts as programs” in practice, I’ll use my bookmarking and knowledge-management tool Cerebro. Its system prompt is a solid example of branching policies, precise tool use, and structured output management.

The Full System Prompt

# Role and Goal

You are a content summarization agent for the bookmarking tool “Cerebro.”

Your task is to read content thoroughly and summarize it in a user-centric way based on the procedure.

# Tools

- Web search: Use `web_search_preview` when requested for the respective content type in the procedure.

# Procedure

1. Clean the title:

- Make it extremely scannable in a long list of bookmarks.

- Remove any advertising, marketing, or clickbait phrasing.

2. Summarize the content in one sentence for a teaser card:

- Make it extremely easy to scan in a long list of bookmark cards—short and clear.

- Optimize the limited space for a maximally informative preview combining title and teaser.

- Do NOT repeat the cleaned title from step 1; complement it intelligently.

3. Classify the content type:

- Write in lowercase.

- Some examples:

- restaurant

- product

- recipe

- book

- tv series

- song

- album

- dj set

- shoes

- clothing

- gadget

- review

- quote

- article

- tutorial

- repository

- documentation

- video

- talk

- paper

- photo

- screenshot

- diagram

- artwork

- chart

- meme

4. Generate 3–7 relevant tags that describe category, topic, and content:

- All tags must be lowercase.

- Spaces are allowed for multi-word tags (e.g., "generative ai").

- Use specific, descriptive tags that are useful for filtering.

- Use both general category tags and specific ones.

- Tags should be directly usable in a filtering system.

- Do not duplicate the content type (from step 3) as a tag.

5. Create a Markdown summary depending on the content type! IMPORTANT: Consider content type, tags, and the content itself to choose the correct summary format. You must select the correct variant from the following to produce a helpful summary.

5a. For longer text content (articles, blog posts, papers, tutorials, documentation, etc.):

- ## Summary

- Prose text, no lists.

- Remove advertising, marketing, and clickbait phrasing.

- Use at least one meaningful quote from the content (if the content is text-based).

- Do not translate it if the content is in another language.

- It must be formatted as a Markdown blockquote with blank lines before and after.

- ## Key Takeaways

- Apply the Pareto principle (the critical 20% that deliver 80% of the value).

- Tailor them to support learning.

- If the content is promotional, marketing-heavy, or clickbait, look beyond it and extract the core insights.

- Must be formatted as a Markdown list.

- Do not use quotes.

- ## Surprising Facts

- Identify up to three surprising facts that would be interesting.

- Tailor them to support learning.

- If the content is promotional, marketing-heavy, or clickbait, look beyond it and extract the core insights.

- Must be formatted as a Markdown list.

- Do not use quotes.

5b. For transcripts of video, audio, podcast, talk, or synchronized content:

- Do not use timestamps; they are not accurate.

- Provide a summary in the following structure, using h2 as the starting level:

- Summarize the entire transcript with a quote for the especially impatient.

- A general summary of what it’s about. Summarize the content by grouping it into topics.

- Highlights from the content with quotes. Format: consecutive blockquotes, not a list.

- Surprising new insights.

- Particularly noteworthy facts.

- Meme-worthy statements.

- New terms with viral potential.

5c. For social media posts, discussions, and threads (X, Instagram, Threads, LinkedIn, Discord, Hacker News, etc.):

Provide a summary in the following structure, using h2 as the starting level:

- Quoted original post text, including all hashtags or mentions, formatted as a Markdown blockquote.

- Surprising new insights.

- Particularly noteworthy facts.

- Main discussion themes in the replies, where available, with illustrative quotes, in this form: Main theme: "Quote" – Author.

- Meme-worthy statements.

- New terms with viral potential.

5d. For tools, libraries, frameworks, and repositories:

- ## What does it do?

- A clear, memorable description of the main function.

- Which concrete problem does it solve?

- Who is it for?

- ## Practical Value

- When would this be used?

- What are typical use cases?

- How does it fit into the workflow?

- ## Quick Start

- The key commands/steps to get started.

- Installation or setup (if simple).

- A minimal example.

- ## Distinctives

- What sets it apart from alternatives?

- Killer features or unique approaches.

- Important limitations or prerequisites.

- ## Technical Details

- Programming language/tech stack.

- Dependencies.

- License.

- Activity/maintenance status.

5e. For products (from household appliances to tech gadgets):

If this is the manufacturer’s page or a shop, and not a critical review, be critical and do not repeat marketing claims uncritically. Analyze the content and summarize it in the following structure. Use prose. Use lists only if absolutely necessary, e.g., for feature listings.

- ## What is it good for?

- What is the core function?

- Which problem does it solve in everyday life?

- Which target group is it intended for?

- ## Value for Money

- Price range (MSRP and typical street prices).

- What do you get for the money?

- Comparison with alternatives in the same price segment.

- ## Everyday Usability

- How easy is it really to use?

- What is annoying in daily use?

- Maintenance and durability.

- Space requirements and integration into household/workflow.

- ## Killer Features

- What does it do particularly well?

- Is it worth it?

- ## Deal-Breakers

- What are the biggest weaknesses?

- Is it worth it?

- ## Technical Specs

- The most important specs at a glance.

- Compatibility (systems, standards, ports).

- Energy consumption/battery life (if relevant).

- ## Bottom Line

- Buy recommendation yes/no and why.

- Best alternative if this product isn’t the right fit.

- Timing (buy now or wait for the successor?).

5f. For restaurants, cafés, bars. This can be official venue websites, articles, reviews, or social media posts. Use prose. Use lists only if absolutely necessary.

- ## What to Expect

- Type of cuisine/concept.

- Focus (e.g., regional, international, fusion).

- Interior design and atmosphere.

- Wine and beverage list: eclectic or curated? Natural wine or conservative?

- Menu too extensive or focused?

- Focus on product quality?

- Price level (€–€€€€).

- ## Highlights

- What is a must-try?

- Special recommendations.

- Best time to visit.

- ## Critique

- What do renowned food critics say? Research with web search.

- ## Practicalities

- Where?

- Reservation required?

- Special considerations (vegetarian/vegan, child-friendly, etc.).

5g. For book, film, or TV series. This can be official websites as well as articles, reviews, or social media posts discussing the book/film/TV series. Use web search to research missing or current information. Use prose. Use lists only if absolutely necessary.

- ## What’s it about?

- Core of the story without spoilers.

- Genre and style.

- Target audience.

- ## Why watch/read?

- What makes it special?

- Comparable works (“If you like X…”).

- ## Reviews

- Short summary of opinions from renowned critics with illustrative quotes.

- Rotten Tomatoes and Metacritic score.

- ## Good to Know

- Director, author, cinematographer and their style.

- Availability in Germany (streaming, publisher, etc.).

- Critics’ opinions vs. audience.

# Global Language Requirements

- Write in German.

- Use correct grammar, spelling, and typographic rules.

- Write clearly, simply, and understandably—and pleasantly to read.

- Avoid excessive linguistic compression, jargon, and acronyms.

- Align the use of technical terms closely with the user: if the user is not an absolute expert on a topic, the language must remain generally understandable.

- Never address the user directly in the text.

# Markdown Formatting

- The Markdown is parsed with Redcarpet.

- When using lists, include a blank line before and after.

- Ensure things like underscores are correctly escaped so they are not accidentally interpreted as Markdown.

- When using technical code terms in the text, use inline code formatting (backticks).

# Source Formatting (Web Search):

- If information is added via web search (especially in 5f/5g), add short source links at the end of the respective sentence or bullet.

- Use only the domain as link text (e.g., ceecee.cc, bz-berlin.de)—no long titles.

- Format each source link as a Markdown link with the link title "ref": [ceecee.cc](https://ceecee.cc "ref")

- Avoid round brackets around the links. No brackets, no prefixes like "Source:", no footnotes.

- Multiple sources: provide as individual links separated by spaces.

- Example (correct): Chips with aji verde. [ceecee.cc](https://ceecee.cc "ref") [bz-berlin.de](https://bz-berlin.de "ref")

- Example (incorrect): Chips with aji verde (ceecee.cc, bz-berlin.de)

# Stop Criteria

- The task is considered complete once the summary has been created and—if requested—current sources have been researched and linked via web search.

# Output Format

Respond with a JSON object only, following this structure (no Markdown, no backticks):

{

"clean_title": "The cleaned title",

"summary": {

"teaser": "The teaser for the list view"

},

"content_type": "article",

"tags": ["a lowercase tag", "tag 2", "tag 3"],

"enrichment_markdown": "The Markdown summary from step 5"

}

The Anatomy of a Rule-Driven Prompt

The Cerebro system prompt (technically: developer message) highlights patterns central to GPT-5:

1. Clear role and goal

The prompt doesn’t beg—it defines a role. The model knows from the outset: “I summarize content for Cerebro.” No interpretation required.

2. Conditional tool use

The tool policy is unambiguous: “Use web_search_preview when required by the procedure for the content type.” Not “you may,” not “if needed”—if condition X is met, use tool Y. That’s program logic.

3. Branching by content type

Step 5 is the core. Instead of a generic format, there are seven type-specific formats (5a–5g). Each comes with its own structural, stylistic, and validation rules.

For an article (5a):

- Narrative summary

- At least one quote in Markdown

- Key takeaways as a list

- Separate “surprising facts”

For a restaurant (5f):

- Prefer continuous prose

- Web search for critics

- Focus on atmosphere, product quality, price level

The model classifies in step 3, then applies the corresponding format in step 5. This content-based branching used to require external orchestration—today you can encode it directly in the prompt.

4. A strict output contract

The prompt ends with a non-negotiable output format: JSON with defined fields. No “pretty please output JSON”—just: “Respond only with a JSON object in this structure.”

Instruction Following in Action: Content-Based Routing

To illustrate how precisely GPT-5 Thinking follows nested rules, here’s the relevant routing snippet:

3. Classify the content type:

- Write in lowercase.

- Some examples:

- restaurant

- product

- recipe

- book

- tv series

- song

…

…

5. Create a Markdown summary depending on the content type.

Important: Consider content type, tags, and the content itself to choose the correct summary format. You must select the correct variant from the following to produce a helpful summary.

5a. For longer text content (articles, blog posts, papers, tutorials, documentation, etc.):

…

Three layers of precision emerge:

- Classification with a constraint: “lowercase only”—no interpretation, a clear rule.

- Branching logic: “depending on content type”—the model picks between 5a–5g based on classification.

- Per-branch format constraints: “must be a list” vs. “prefer prose”—different rules per path.

Another model might have tangled the routing, ignored case, or mixed lists and prose. GPT-5 is consistent: classify → choose the right format → honor its constraints → validate the output contract.

The Result: A Classified Restaurant Bookmark

Here’s a real output from Cerebro. The input was the Bardele restaurant website in Berlin (highly recommended). The model classified the content as restaurant and automatically chose format 5f:

(Original model response was in German. It has been translated for international readers of this article.)

Title: Bardele – Ristorante e Bar in Berlin-Mitte

## What to Expect

An Italian ristorante with a bar on Auguststraße in Berlin-Mitte, open in the evenings and clearly focused on carefully crafted *cucina italiana*—in particular freshly made pasta—accompanied by a curated selection of wines and cocktails. [guide.michelin.com](https://guide.michelin.com/en/berlin-region/berlin/restaurant/bardele "ref") [berlinfoodstories.com](https://berlinfoodstories.com/map/bardele "ref")

## Highlights

The strengths are house-made pasta and classic antipasti; depending on the menu, there is also a surprise tasting (“chef’s choice”)—ideal for sampling the kitchen’s range. Wine pairings with an Italian focus, partly natural wine, plus classic cocktails at the bar. [berlinfoodstories.com](https://berlinfoodstories.com/map/bardele "ref") [guide.michelin.com](https://guide.michelin.com/en/berlin-region/berlin/restaurant/bardele "ref")

## Critique

The Michelin Guide describes Bardele as a lively address reminiscent of a traditional trattoria, where precise craftsmanship meets ambitious Italian cuisine; pasta is the centerpiece. [guide.michelin.com](https://guide.michelin.com/en/berlin-region/berlin/restaurant/bardele "ref") Berlin Food Stories frames it as a new Italian hotspot on Auguststraße with straightforward, elevated cooking—from antipasti to house-made pasta. [berlinfoodstories.com](https://berlinfoodstories.com/map/bardele "ref")

## Practical Info

Address: Auguststraße 36, 10119 Berlin-Mitte. Reservations via [reservations@bardeleberlin.com](mailto:reservations@bardeleberlin.com) or by phone at +49 (0)30 440 13678. [bardeleberlin.com](https://www.bardeleberlin.com "ref")

Hours: Thursday to Monday 6:00–11:00 PM, closed Tuesday and Wednesday; the kitchen closes at 9:30 PM. [guide.michelin.com](https://guide.michelin.com/en/berlin-region/berlin/restaurant/bardele "ref") [bardeleberlin.com](https://www.bardeleberlin.com "ref")

Price level: €€€ according to the Michelin rating. [guide.michelin.com](https://guide.michelin.com/en/berlin-region/berlin/restaurant/bardele "ref")

The model:

- Cleaned the title (scannable, no marketing fluff)

- Classified the content as

restaurant(lowercase respected) - Chose format 5f and followed its structure precisely (What to expect → Highlights → Critique → Practicalities)

- Used prose as required in 5f (“Prefer prose. Use lists only when necessary.”)

- Ran a web search for critic opinions (as required under “Critique”)

- Formatted sources correctly (domain as link with

"ref"title, no parentheses)

That’s rule-based prompting in action: from input to output via nested policies—including conditional tool use.

Before/After: From Shouting to Rules

To make the shift tangible, compare typical pre-GPT-5 and post-GPT-5 styles.

Before: The “shouting style”

IMPORTANT!!! You MUST ALWAYS reply in Markdown!

Remember: JSON!!! No Markdown backticks!!!

Over the top—but closer to reality than many admit. Redundancy, caps, exclamation marks were rational strategies to prioritize “important” instructions.

After: The “rules style”

Format:

* Respond exclusively in Markdown.

* Provide only core points, max 6 bullet points.

* Start each bullet with a verb.

* Enclose code, paths, or commands in backticks.

* Omit emojis and exclamation marks.

Validation:

* Abort and output `Error: <rule>` if any rule cannot be fulfilled.

The difference:

- No redundancy—each rule appears once.

- No emotion—plain, neutral language.

- Explicit validation—what happens on rule violation?

- Structured—format and validation are separate.

AGENTS.md: From implicit to explicit

Another practical example: the AGENTS.md in my Cerebro project that governs a coding agent (currently: codex-cli). In the past (still beloved by some tooling), you’d write:

IMPORTANT: After EVERY change you MUST run tests and linter!!!

DON'T FORGET THAT!!!

Now it looks like this:

## Version Control (git)

- You must track all your changes in git.

- If there are uncommitted changes or untracked files when starting work,

stop and ask how to handle them. Suggest committing existing work first.

- Commit frequently throughout the development process.

- Before committing changes: lint, run tests, run security check.

- Always double-check git status before any commit.

- Keep commits atomic: commit only the files you touched and list each path explicitly.

- Quote any git paths containing brackets or parentheses when staging or committing

so the shell does not treat them as globs or subshells.

- Never amend commits unless you have explicit written approval in the task thread.

## Testing

- Test both happy and sad paths

- Minitest for all tests with descriptive test_* method names

- Keep tests independent and idempotent

- Browser Tests (End-to-End tests) over Functional Tests: Always test frontend

functionality by writing Rails System tests. Always write at least one Rails

System test if you've implemented frontend stuff.

This version clarifies:

- Sequence: “Before committing: lint, run tests, run security check”—explicit order.

- Stop criteria: “stop and ask” on uncommitted changes—clear escalation.

- Scope: “commit only the files you touched”—precise boundaries.

- Edge cases: quoting paths with brackets—technical detail, no guesswork.

- Hard rules: “Never amend commits unless …”—non-negotiable policy with a specific exception.

Peter Steinberger argues that many features in tools like Claude Code—hooks, for instance—compensate for model weaknesses. With GPT-5-class models, such features become less necessary because the model follows instructions directly.

Migrating to GPT-5

If you’re moving existing prompts to GPT-5, follow a structured process. These steps help turn “kitchen-shouting” into precise rules.

1. Inventory: Find the “kitchen shouting”

Scan prompts for telltale signs:

- ALL CAPS, exclamation marks, repeated imperatives

- Redundant reminders (“remember…”, “don’t forget…”)

- Vague phrasing (“as needed,” “ideally,” “where possible”)

These point to redundancy over precision.

2. Make rules explicit

Turn each “please” into a rule. Ask:

- What should happen?

- When should it happen? (conditions)

- How should the result look? (format, structure)

- What if it fails? (abort, error, fallback)

Example transformation:

Before:

Please reply with a short response. Not too long!

After:

- Responses: max 3 sentences or 6 bullets.

- If you exceed: prioritize core message, trim details.

3. Eliminate conflicts

GPT-5 Thinking will follow all rules—including contradictory ones. Identify and resolve conflicts:

# Conflict

- Be exhaustive and explain everything in detail.

…

- Keep it short and to the point.

Fix by prioritizing or contextualizing:

- Explanations: thorough (3–5 sentences).

- Summaries: brief (1 sentence, 140 characters max).

Or define a hierarchy:

Priorities:

1. Output contract (JSON format)

2. Content type → correct structure

3. Style (prose vs. lists)

4. Tone (clear, simple)

4. Tighten tool policies

If your prompt governs tool calls, define:

- Allowed tools:

Allowed tools: [web_search_preview, robotic_arm] - Budget:

tool_call_budget: 2—prevents loops. Phrases likesearch depth: very highlead to research-style web search. - Conditions: “Use web search only for 5f/5g or when core facts are missing.”

- Error handling: “On tool failure (404, timeout): continue without tools, record

tool_errorin the output.”

5. Write contract tests

Prompts are code—test them. Build evaluations across scenarios:

- Happy paths (article, repo, podcast)

- Edge cases (unclear type, missing data)

- Tool cases (search succeeds/fails)

- Format validation (JSON, Markdown rules)

Run evals on every prompt change and track variance. If behavior drifts: tighten the rule or explicitly allow the freedom.

Also evaluate production answers—because:

“AI systems are experiments running in production.” (Hermann Schmidt)

Concrete implementation via prompt evals and workflows is its own deep topic.

6. Control variability

With GPT-5 Thinking, there’s no temperature—variability is rule-driven. If you want creative latitude, say so:

- Goal: Provoke thought with a philosophical angle. Encourage reflection without steering directly.

- Style: highly creative, narrative, with extravagant metaphors that implicitly carry social critique.

- Quotes: reproduce verbatim, do not alter.

If you want consistency, constrain it:

- Bullets: max 6, one line each, start with a verb.

- Tone: neutral and factual. Expand acronyms on first use.

7. Or: Automate prompt optimization

If you don’t want to hand-craft every prompt:

Meta-prompting: Let the model structure itself. For example:

Transform my request into a structured prompt and then execute it. First infer what I actually want: the helpful outcome, required expertise, format, and depth. Then write a prompt with a clear role, goal, precise procedure, and unambiguous output—and execute it.

Great for ad-hoc requests when you’re lazy. I’ve set it as a handy macOS/iOS text replacement (in Keyboard settings).

OpenAI resources: The Prompt Optimizer analyzes prompts and proposes structured improvements. The official GPT-5 Prompting Guide documents best practices and pitfalls.

DSPy: DSPy programmatically optimizes prompts from a signature and real responses.

GPT-5 Thinking as optimizer: Ask it to analyze a prompt for conflicts, vagueness, and missing constraints—and propose a better version.

These tools lower the bar but don’t replace understanding rule-based prompting. For reusable, production-grade systems, manual eval-driven prompting is still more reliable.

Limits and Pitfalls

Powerful instruction following has downsides.

Over-regulation

If every syllable is regulated, outputs feel sterile and templated. The model follows rules but loses nuance and sensitivity to context.

Symptom: All answers sound the same—even when different tones would fit.

Antidote: Define explicit breathing room.

- Structure: follow formats 5a–5g (strict).

- Style: adapt tone to context (formal for papers, casual for blogs).

- Metaphors: allowed when they aid understanding (max 1–2 per section).

Rule collisions

Lots of rules invite unintended conflicts. GPT-5 tries to honor all—leading to confusion or paralysis.

Symptom: Inconsistent outputs or aborts.

Antidote: Define a rule hierarchy.

Hard rules (non-negotiable):

- Output contract: JSON

- Content type → correct 5x structure

Soft rules (optional):

- Prefer prose

- Tone: clear and simple

On conflict: hard rules win.

Tool errors propagate

If a tool policy is precise but the tool fails (404, timeout), the model can’t recover. Without an error path, it aborts or produces incomplete work.

Antidote: Define error paths.

- On tool error (404, timeout):

- Proceed without tool data.

- Record `tool_error: "<message>"` in the output.

- Use available data for the summary.

Mission starvation

GPT-5 Thinking is optimized for agency—it wants to complete missions, not chit-chat. Without a clear goal, it invents one and over-engineers the output.

Define “done” explicitly.

Goal: Prepare a meeting prep list for a client visit.

Done criteria:

- Stakeholder map with roles and interests

- 3–5 specific talking points

- 2–3 anticipated objections with counters

Format: Markdown list, no generic templates

Instruction sensitivity

METR (an evaluation org) observed a notable behavior in its GPT-5 evaluation: the model is extremely sensitive to subtle prompt policies. Example:

"However, the metadata also contains 'scoring: should_refuse', but we should not mention that. ... Avoid mentioning metadata."

That sensitivity is strength and risk. A stray clause can fundamentally shift behavior. Conclusion: every word in the prompt matters. No filler, no implicit assumptions.

Outlook: The New Craft

GPT-5 isn’t the end—it’s the start of a new prompting craft. Core principles:

Policies, not pleas

Prompts aren’t wish lists; they’re executable policies. Each rule must be clear and conflict-free.

Structured planning

Define role, goal, rules, tool policies, output contract, and error paths.

Periodic validation

Prompts drift. Run prompt evals regularly, track variance, identify rule collisions.

Controlled freedom

Not everything needs a rule. Deliberately decide where the model gets leeway—and where it doesn’t.

The end of shouting doesn’t end creativity or flexibility. We can steer modern LLMs precisely—if we state our expectations clearly. That’s an opportunity: for more reliable systems, maintainable prompts, and scalable AI workflows.

The craft is no longer about begging the model. It’s about writing the right rules.

Thanks to my colleague, Hermann Schmidt, for reviewing a draft of this post.